Creating a User Interface for a Virtual Reality application that allows physiotherapists to observe the patient’s actions in VR while also influencing the virtual environment.

Observing users’ actions in Virtual Reality is essential for evaluating a VR application, and it also allows observers to take part in the experience. This is relevant not only for entertainment but also in professional settings where VR is used for therapy.

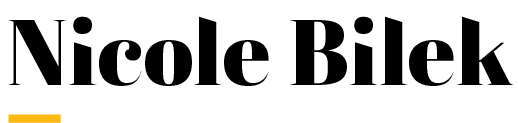

To learn about users’ needs, interviews with experts in the fields of physiotherapy and gait analysis were conducted. The findings of the interviews were then reformulated into User Stories to make sure the final UI meets the users’ requirements.

In the empirical part of the master thesis, a functional prototype was created and tested to validate the requirements of the experts and to evaluate different ways of implementing these requirements.

Clustering the findings of the interviews to formulate the User Stories:

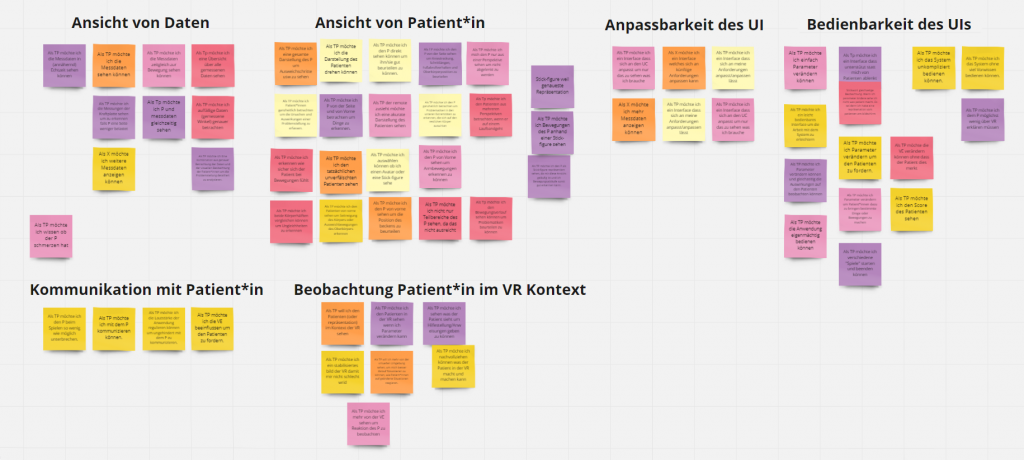

To create the layout, different variants were first sketched on paper to plan the arrangement of the elements and to bring the individual areas into a visual hierarchy. The layouts differed mainly in the proportions and proximity of the individual elements.

Since the target platform was not yet known at that time, the layout was designed to fit both computer and tablet screens.

User Stories define what needs to be implemented in an application and why. They usually don’t include how something should be done. To find the best way to fulfill the User Stories, and therefore the needs of the target group, I worked on different versions of the UI areas mentioned above.

In the next step, different variations of the layout were converted into an interactive prototype in Adobe XD.

Adobe XD’s features were not sufficient to test the other areas of the UI, which should allow users to view the virtual environment. Therefore, another prototype was built in Unity 3D.

Of all the layouts, only three were selected for implementation in Unity. These were the layouts that could be adapted most easily if needs change during future development of the application.

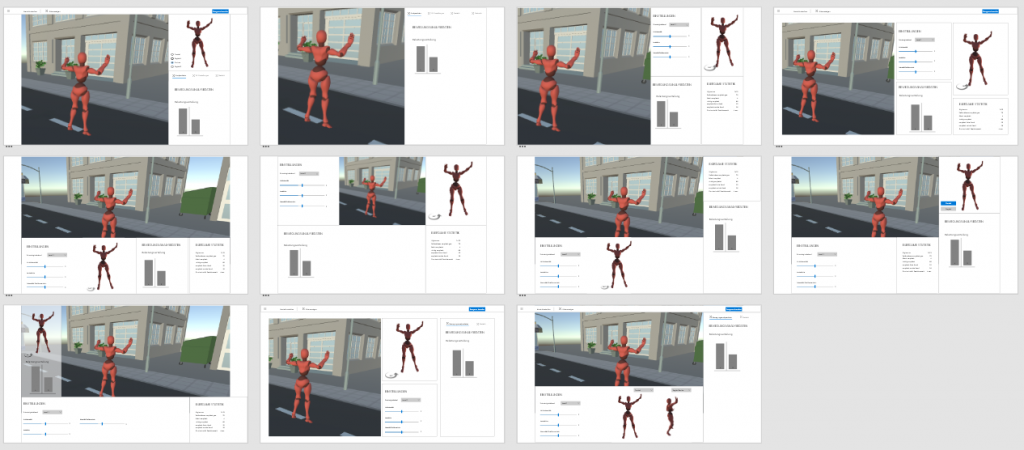

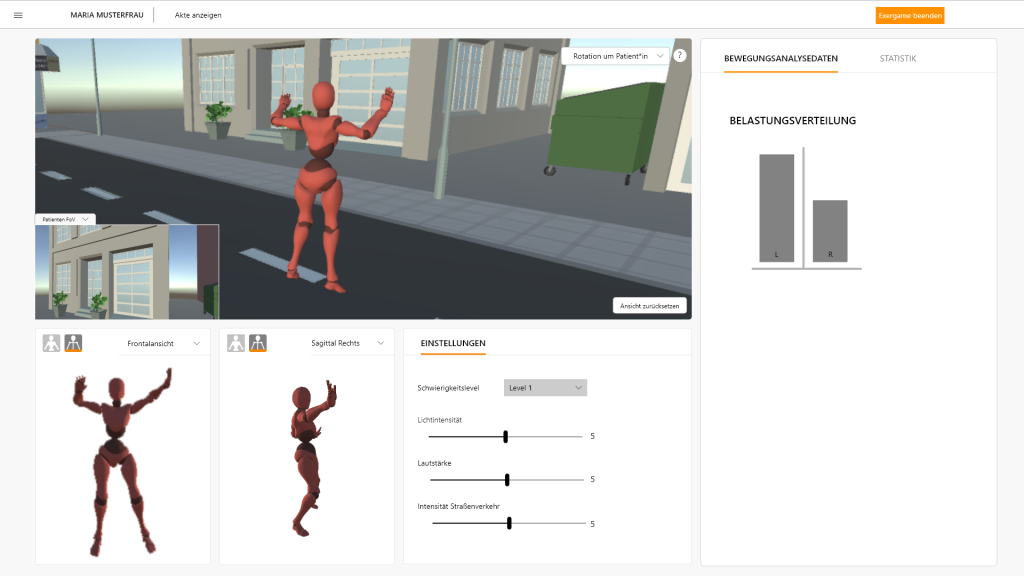

I implemented different virtual cameras in a Unity scene that allow the observer to interactively view the environment and the VR user who moves within it.

In the User Interface, therapists can select the different virtual cameras via a drop-down menu.
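The camera selection can be sketched in a few lines. The prototype itself was built in Unity, so the following Python snippet is only a language-neutral illustration of the idea and not the code used in the thesis. The class names and the camera labels are hypothetical. The sketch assumes that exactly one camera is active at a time and that the drop-down reports the index of the chosen entry.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualCamera:
    """A named viewpoint in the scene (hypothetical stand-in for a Unity camera)."""
    name: str
    active: bool = False


@dataclass
class CameraSelector:
    """Keeps exactly one camera active, mirroring a drop-down selection."""
    cameras: list[VirtualCamera] = field(default_factory=list)

    def options(self) -> list[str]:
        # Labels that would populate the drop-down menu.
        return [cam.name for cam in self.cameras]

    def select(self, index: int) -> VirtualCamera:
        # Reject indices the drop-down could not have produced.
        if not 0 <= index < len(self.cameras):
            raise IndexError(f"no camera at index {index}")
        # Activate the chosen camera and deactivate all others.
        for i, cam in enumerate(self.cameras):
            cam.active = (i == index)
        return self.cameras[index]


# Camera labels are assumptions made for this example.
selector = CameraSelector([
    VirtualCamera("Patient view"),
    VirtualCamera("Top-down"),
    VirtualCamera("Follow"),
])
selected = selector.select(1)
```

Driving the selection by index keeps the menu entries and the camera list in the same order, so adding a camera only requires adding one entry to the list.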

If the patient’s motion is captured via Motion Tracking, their movements can be displayed by an avatar. This avatar helps the therapist to keep the patient in sight at all times, even while looking at the screen to change parameters or observe tracked gait data.

The user tests were conducted remotely (due to COVID-19). The prototype was evaluated via a questionnaire that was based on the User Stories. The goal was to find the variants that were best suited to fulfill the User Stories and therefore the users’ needs.

During the test, subjects were asked to describe what they saw in the UI and were then given two tasks:

The observation of the participants during the tests and the analysis of the questionnaire revealed the most suitable variants of the interface. They were then combined and redesigned. The final version is shown below.

The interviews and user tests revealed that the requirements for a UI that supports therapists using VR depend heavily on the goal of the therapy and the exergame* used.

*Computer games that encourage physical movement

What we can say is that areas that impact each other need to be visible simultaneously. Changing what VR users see influences their movement and therefore the tracked motion data, so these areas must be displayed at the same time. If no motion data is tracked, displaying the other information is unnecessary. The UI needs to be adapted to fit the use case.
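One way to express this rule is as a small function that decides which UI areas to show. This is a minimal sketch and not the thesis implementation. The panel names are hypothetical, and the sketch assumes that the avatar and the motion data both depend on Motion Tracking being available.

```python
def visible_panels(motion_tracked: bool) -> list[str]:
    """Return the UI areas to show for the current use case (panel names are assumptions)."""
    # The VR view and the environment controls affect each other,
    # so they are always shown together.
    panels = ["vr_view", "environment_controls"]
    if motion_tracked:
        # Motion data and the avatar only make sense when tracking is available.
        panels += ["motion_data", "avatar"]
    return panels


with_tracking = visible_panels(motion_tracked=True)
without_tracking = visible_panels(motion_tracked=False)
```

Keeping the rule in one place makes it easier to adapt the layout when a new exergame or therapy goal changes which areas depend on each other.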

The created User Interface is meant to complement the VR application of the ReMoCap-Lab at the UAS St. Pölten. For more info about the project, feel free to visit https://research.fhstp.ac.at/projekte/remocap-lab.